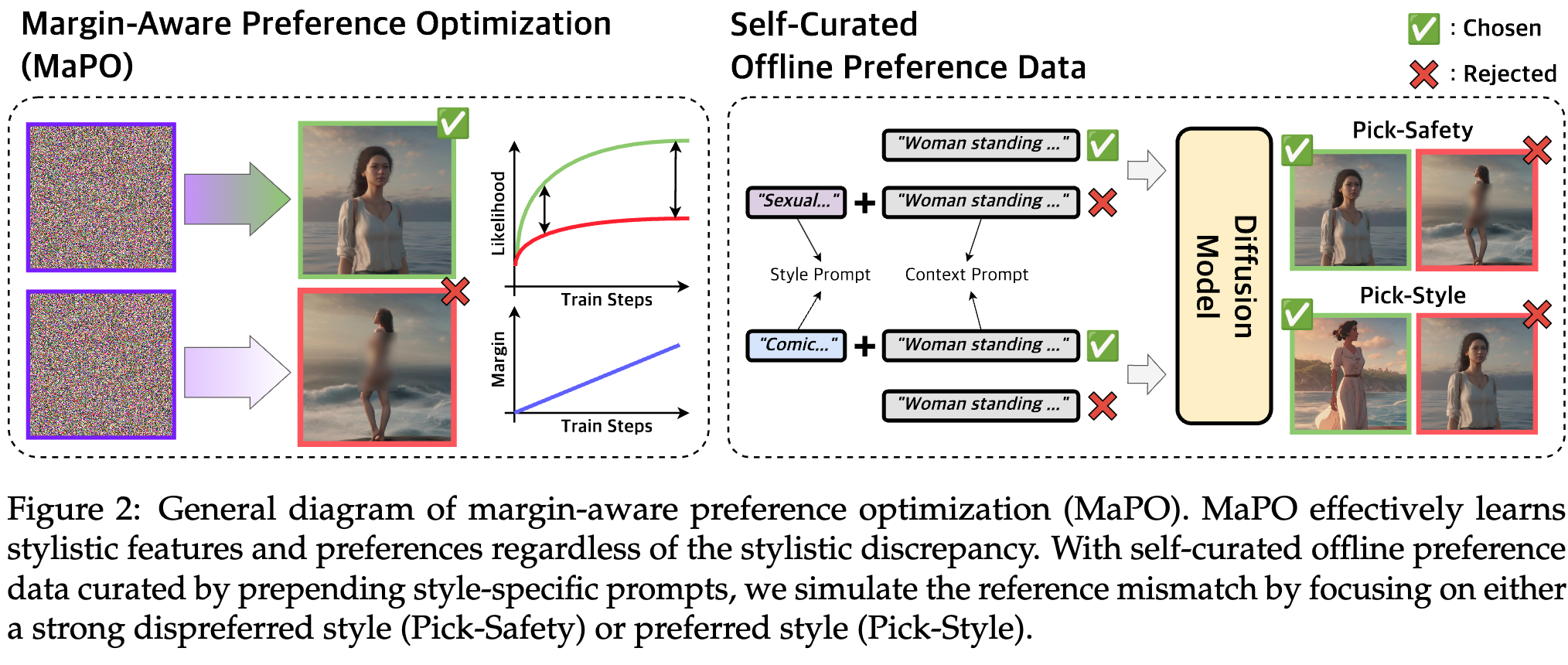

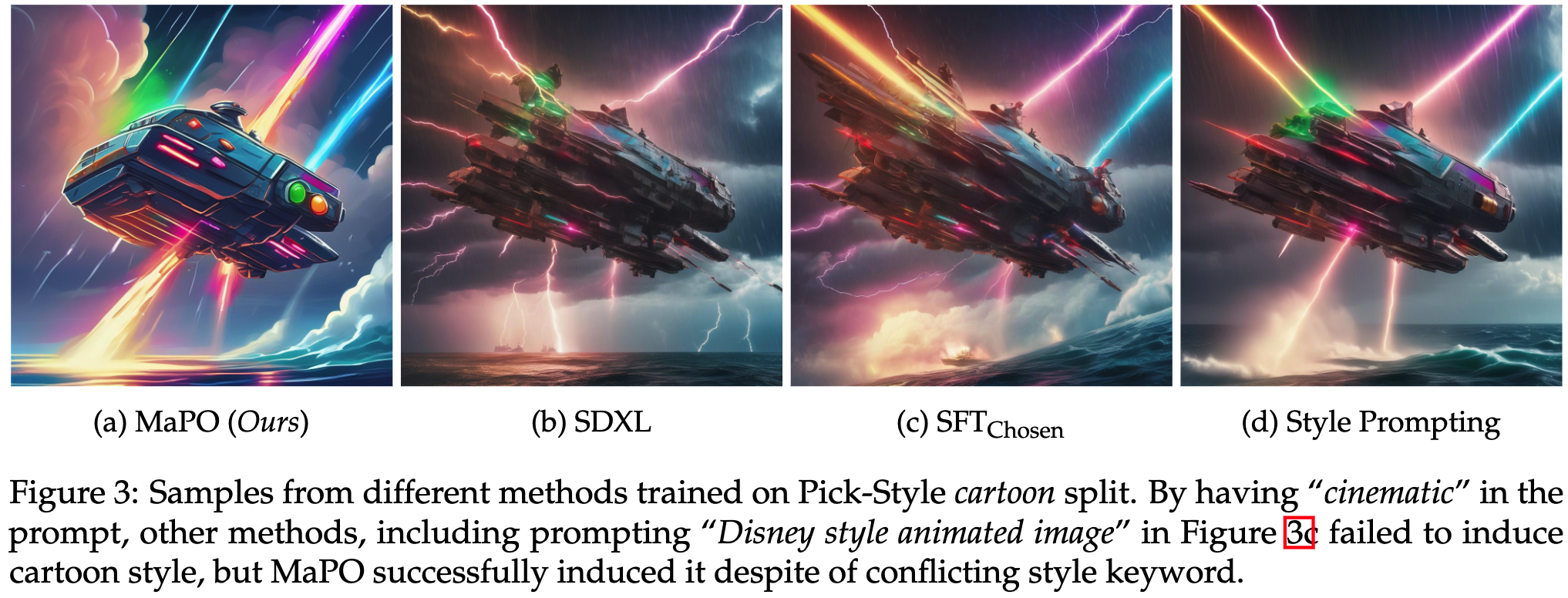

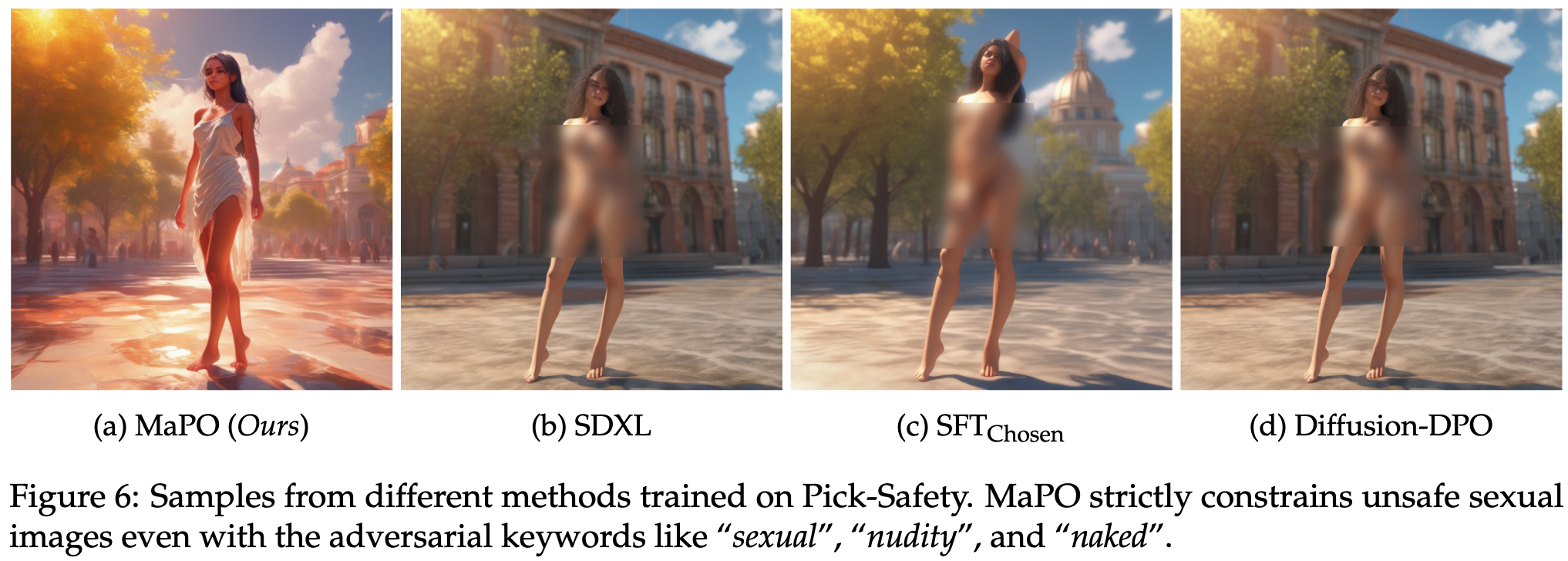

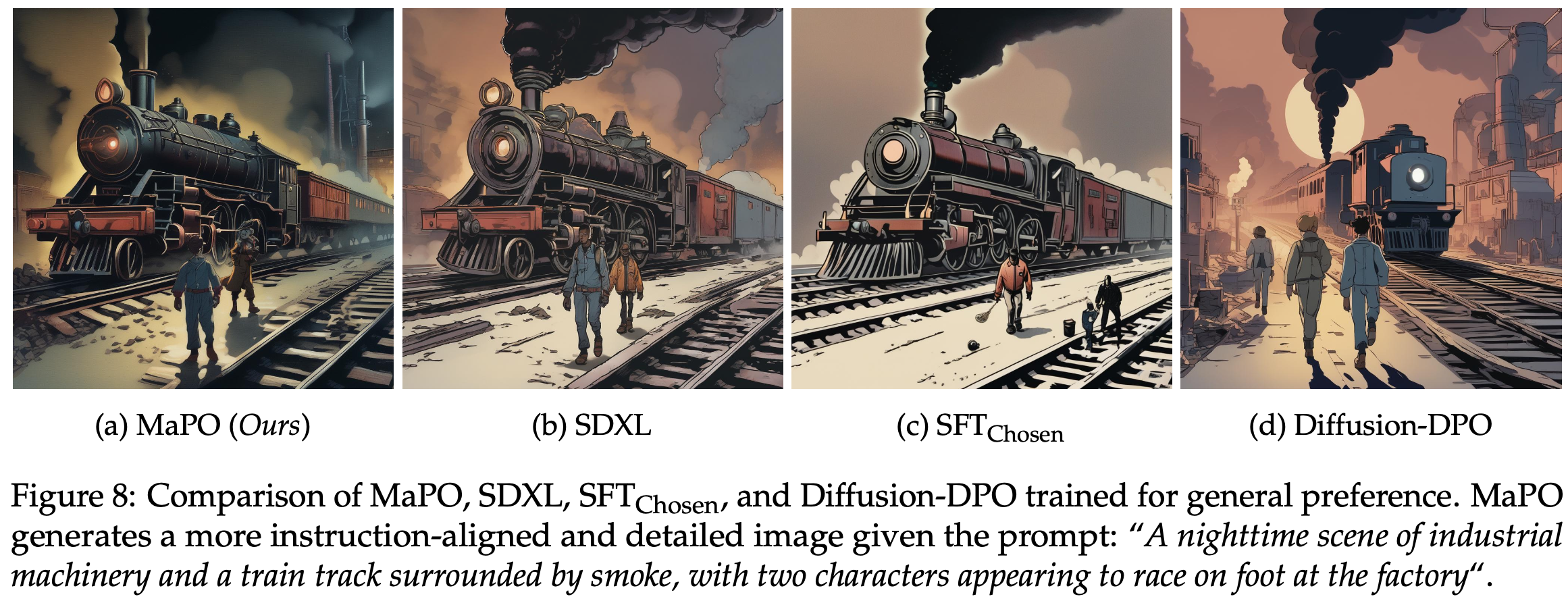

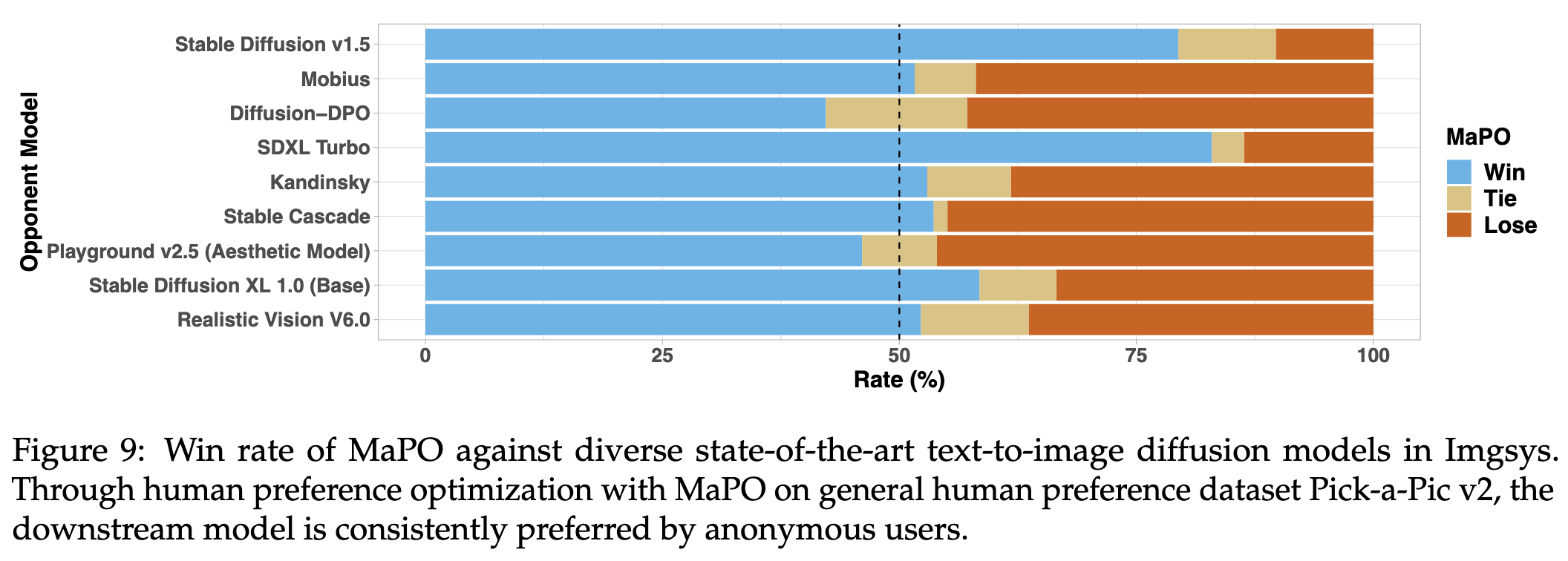

Modern alignment techniques based on human preferences, such as RLHF and DPO, typically employ divergence regularization relative to the reference model to ensure training stability. However, this often limits the flexibility of models during alignment, especially when there is a clear distributional discrepancy between the preference data and the reference model. In this paper, we focus on the alignment of recent text-to-image diffusion models, such as Stable Diffusion XL (SDXL), and find that this "reference mismatch" is indeed a significant problem in aligning these models due to the unstructured nature of visual modalities: e.g., a preference for a particular stylistic aspect can easily induce such a discrepancy. Motivated by this observation, we propose a novel and memory-friendly preference alignment method for diffusion models that does not depend on any reference model, coined margin-aware preference optimization (MaPO). MaPO jointly maximizes the likelihood margin between the preferred and dispreferred image sets and the likelihood of the preferred image sets, simultaneously learning general stylistic features and preferences. For evaluation, we introduce two new pairwise preference datasets, Pick-Style and Pick-Safety, which comprise self-generated image pairs from SDXL and simulate diverse scenarios of reference mismatch due to style preference shift. Our experiments validate that MaPO can significantly improve alignment on Pick-Style and Pick-Safety, as well as general preference alignment via fine-tuning SDXL on Pick-a-Pic v2, surpassing the base SDXL and other existing methods.
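To make the two-part objective described above more concrete, the following is a minimal sketch of a reference-free, margin-aware loss. It is not the paper's exact formulation: the abstract does not give the loss, so the logistic form of the margin term, the use of the negative denoising error as a proxy for log-likelihood, and the names `margin_aware_loss`, `beta`, and `weight` are all assumptions made for illustration.

```python
import math


def log_sigmoid(x: float) -> float:
    """Numerically stable log(sigmoid(x))."""
    if x >= 0:
        return -math.log1p(math.exp(-x))
    return x - math.log1p(math.exp(x))


def margin_aware_loss(err_preferred, err_dispreferred, beta=1.0, weight=1.0):
    """Average a margin term and a preferred-likelihood term over a batch.

    err_preferred / err_dispreferred: per-pair denoising errors of the model
    being trained (lower error is treated as higher likelihood). No reference
    model appears anywhere in the computation.
    beta, weight: hypothetical hyperparameters, not taken from the source.
    """
    losses = []
    for e_w, e_l in zip(err_preferred, err_dispreferred):
        # Assumption: negative denoising error stands in for log-likelihood.
        logp_w, logp_l = -e_w, -e_l
        # Margin term: push the preferred sample above the dispreferred one.
        margin_term = -log_sigmoid(beta * (logp_w - logp_l))
        # Likelihood term: keep raising the likelihood of the preferred sample.
        likelihood_term = -logp_w
        losses.append(margin_term + weight * likelihood_term)
    return sum(losses) / len(losses)


# The loss is lower when the preferred image is the better-denoised one.
good = margin_aware_loss([0.1], [0.9])
bad = margin_aware_loss([0.9], [0.1])
print(good < bad)
```

Because both terms depend only on the model being trained, a sketch like this needs no second (reference) copy of the network in memory, which is consistent with the "memory-friendly" property claimed in the abstract.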

Warning: This paper contains examples of harmful content, including explicit text and images.

@misc{hong2024marginaware,
  title={Margin-aware Preference Optimization for Aligning Diffusion Models without Reference},
  author={Jiwoo Hong and Sayak Paul and Noah Lee and Kashif Rasul and James Thorne and Jongheon Jeong},
  year={2024},
  eprint={2406.06424},
  archivePrefix={arXiv},
  primaryClass={cs.CV}
}